7/12 - Update: “Dear Mama” Emmy nomination for Best Documentary or Nonfiction Series

Well, if you are my age and grew up in the ’90s, you listened to Tupac. Even all of us white boys from Camarillo knew every word to every song. His music really was the soundtrack to the last decade of the millennium and my youth.

Tonight is the final installment of “Dear Mama,” FX’s most-watched unscripted show.

Perfect for a Mother’s Day weekend! Please go check it out on FX tonight or streaming on Hulu.

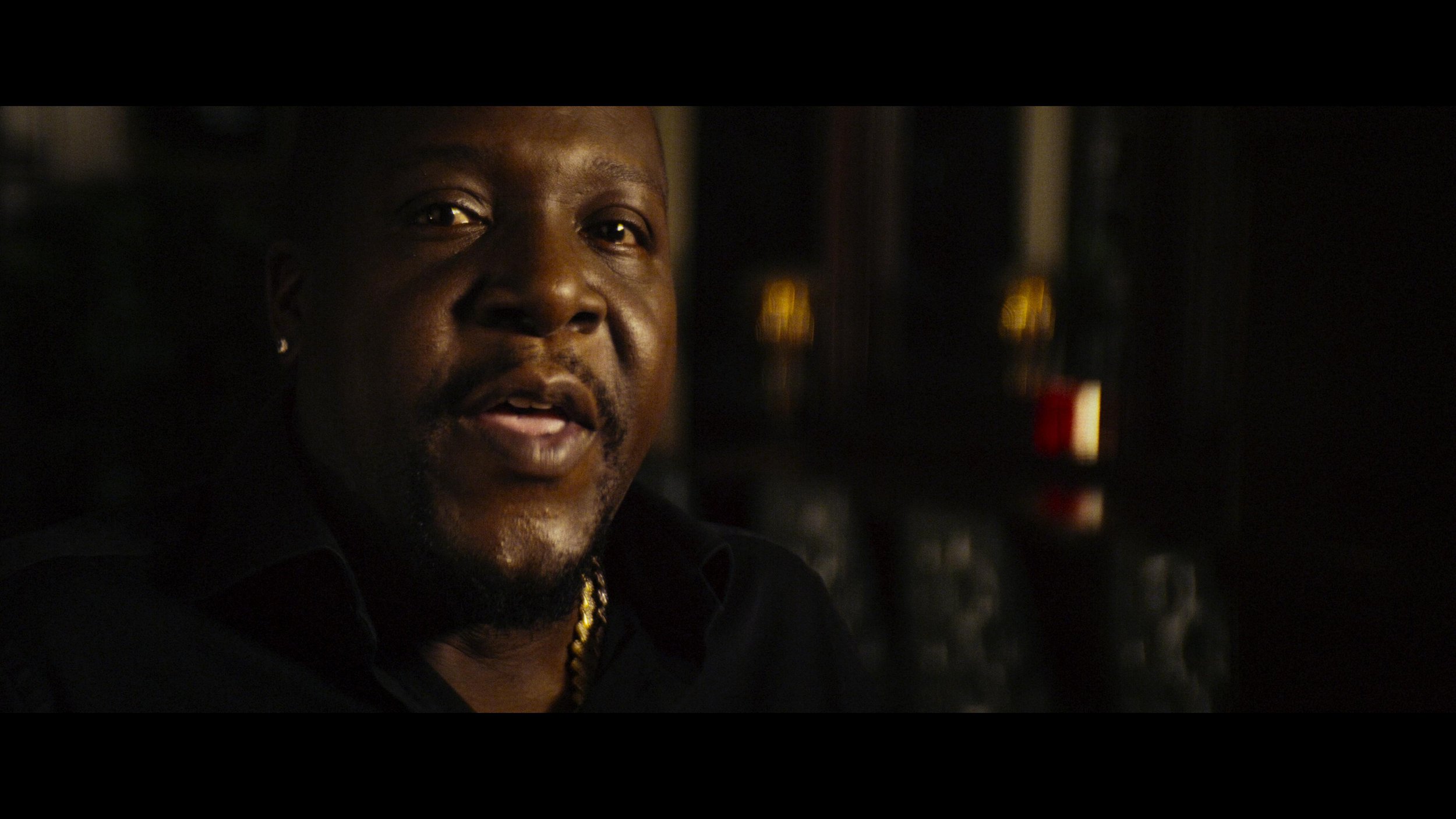

Allen Hughes directed this insightful docuseries. Fitting because Allen and his brother directed Tupac’s early music videos. Sure, there was a bit of drama, but that adds to the flavor of the story. That connection to the material made Hughes the quintessential choice for captaining this ship. Tupac wasn’t any one thing; more like an eclectic stew of many influences and identities. One thing is for sure. Dude was thug life for real.

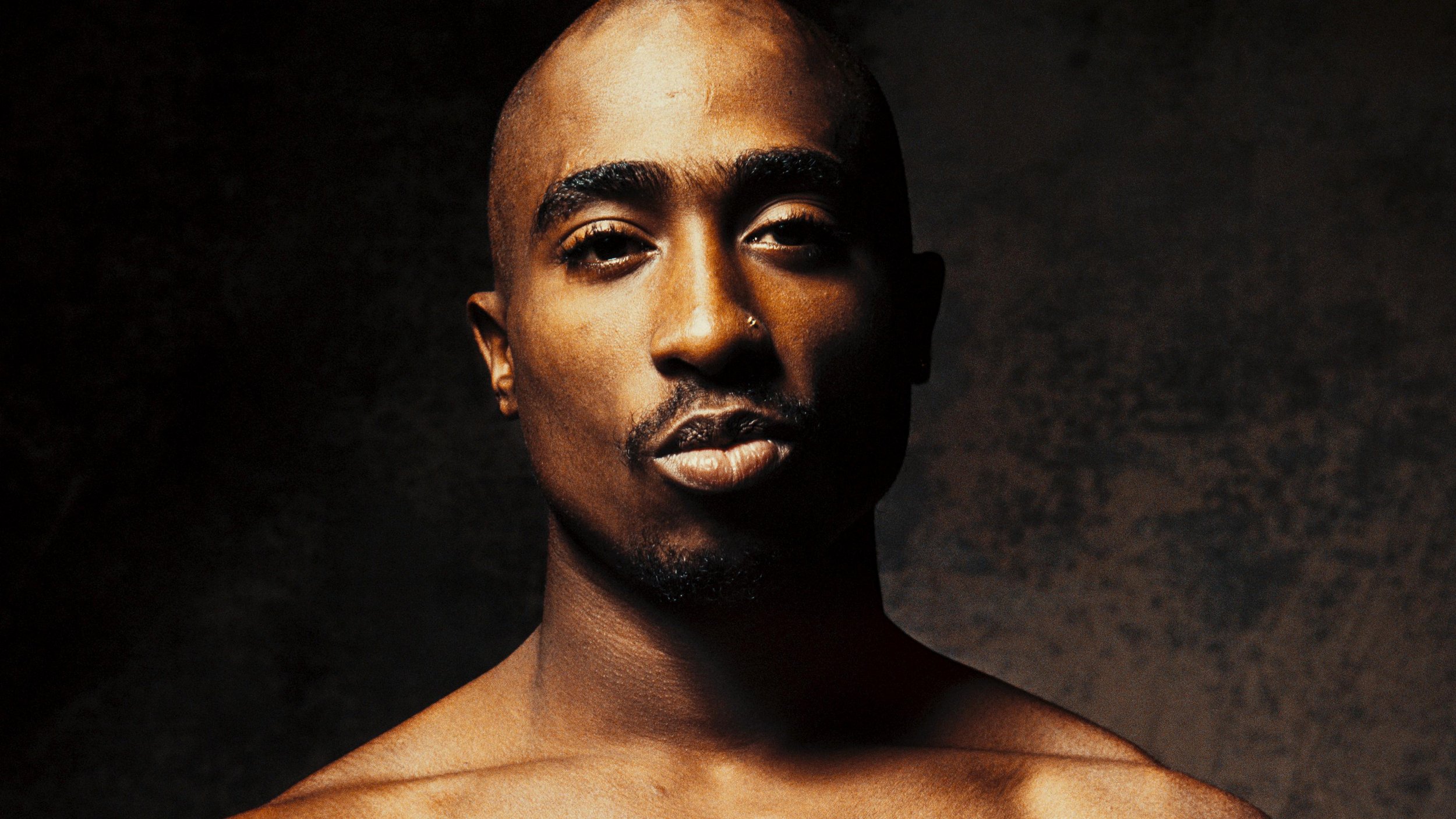

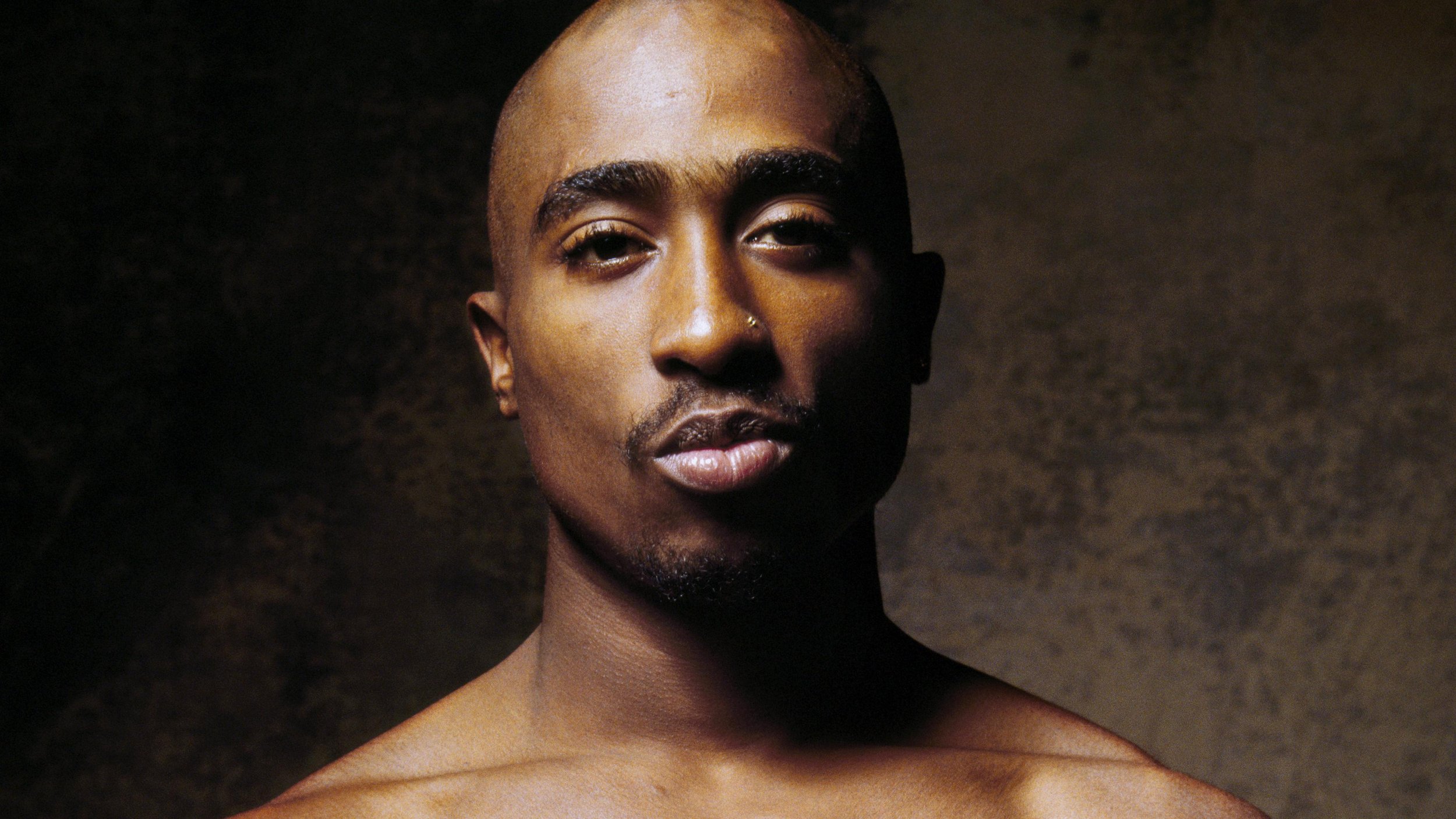

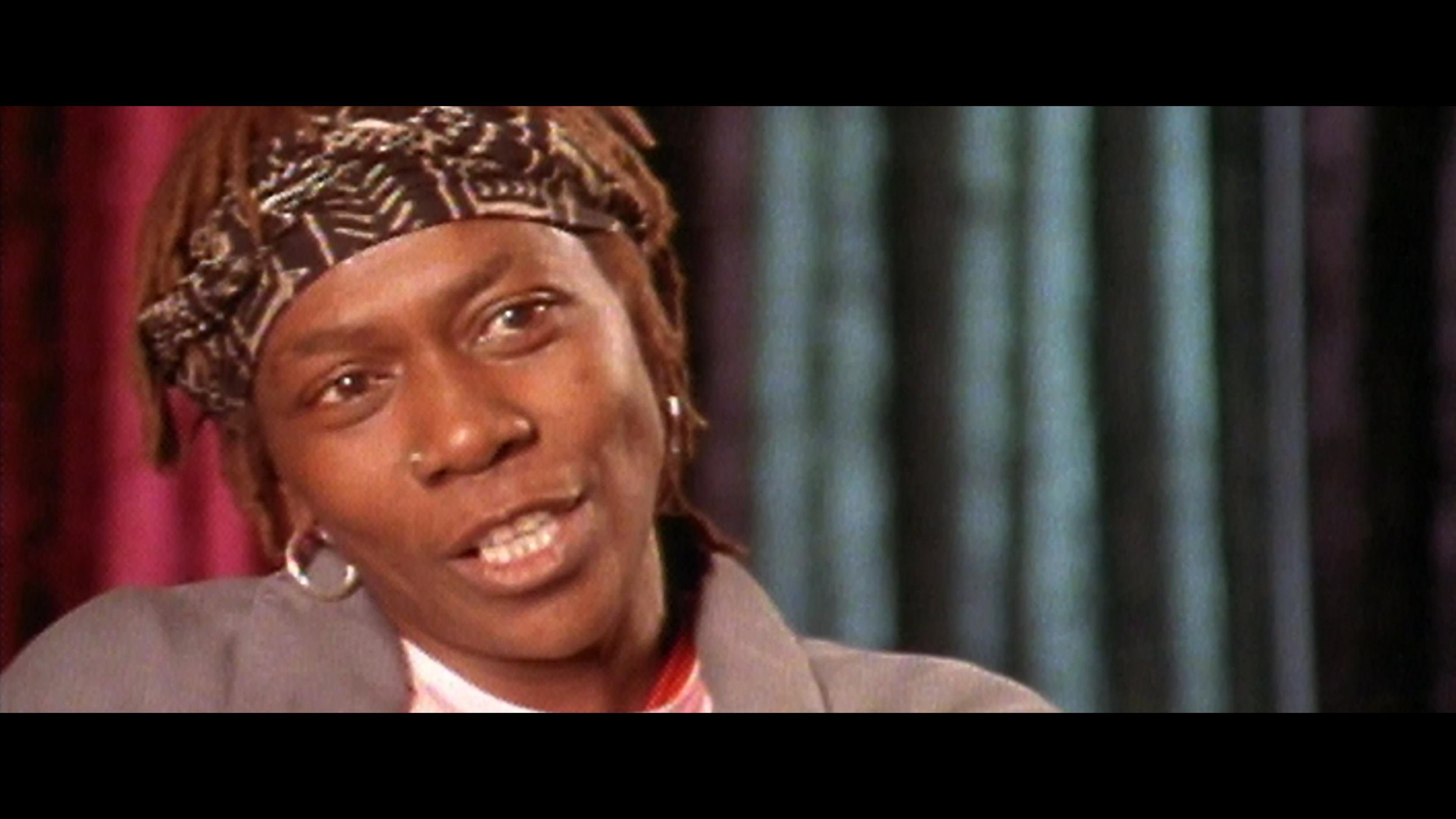

Cognac hues or Hughes as it were

Allen was clear on the look and vibe he wanted for the series. Cognac was the word. We spent a couple of weeks developing a look that feels like you have filtered the light through a fine liquor. We also used LiveGrain to achieve that end-of-the-film-era perfect Kodak grain structure of the ’90s.

Documentary grading is an entirely different beast. Here are a few tips for you to tackle your next interview-based production.

Color management - I preach this a lot, but it is even more critical when you are juggling many different sources.

Sounds basic, but group your interviews.

Normalize the frame rate upfront.

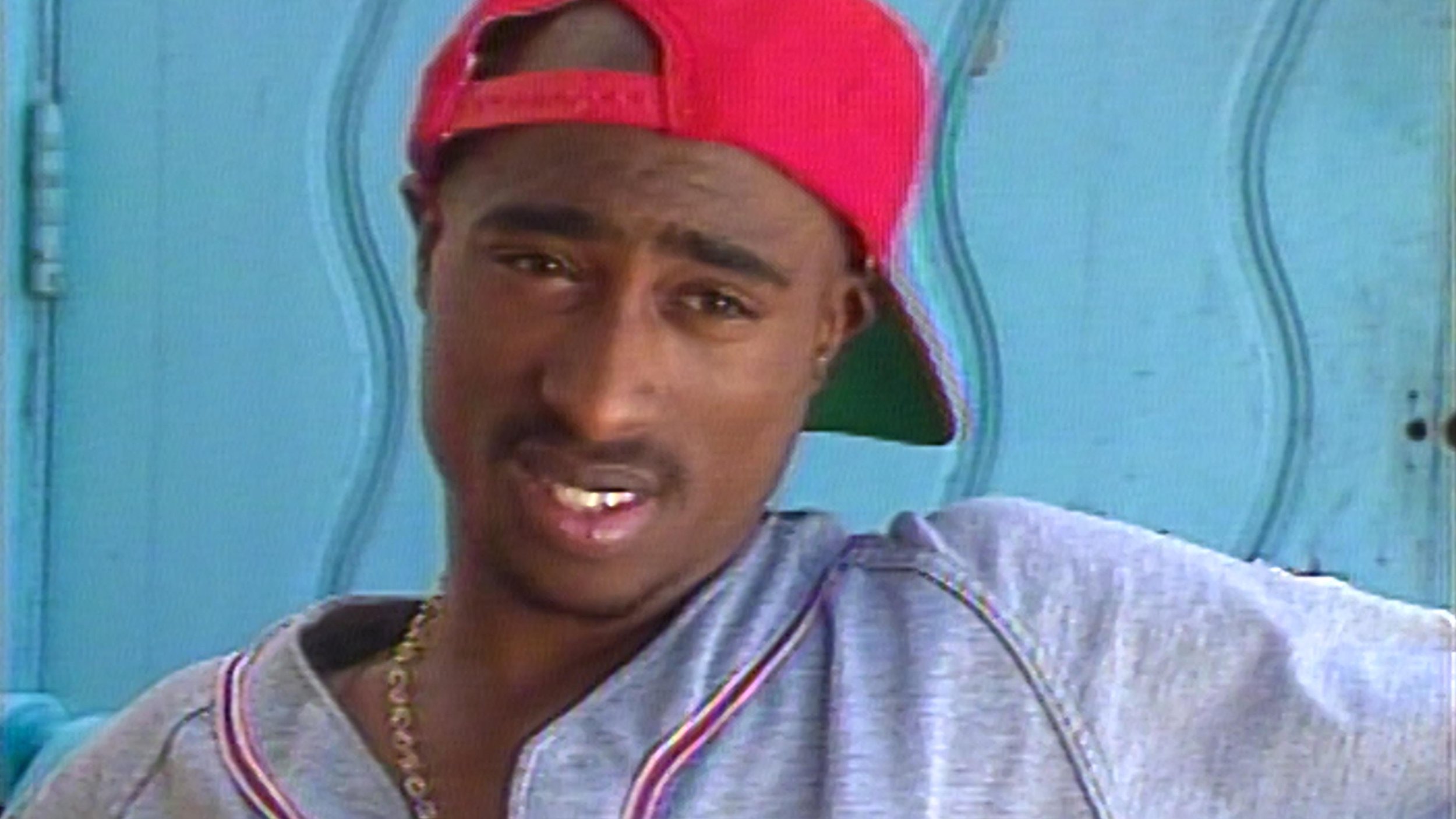

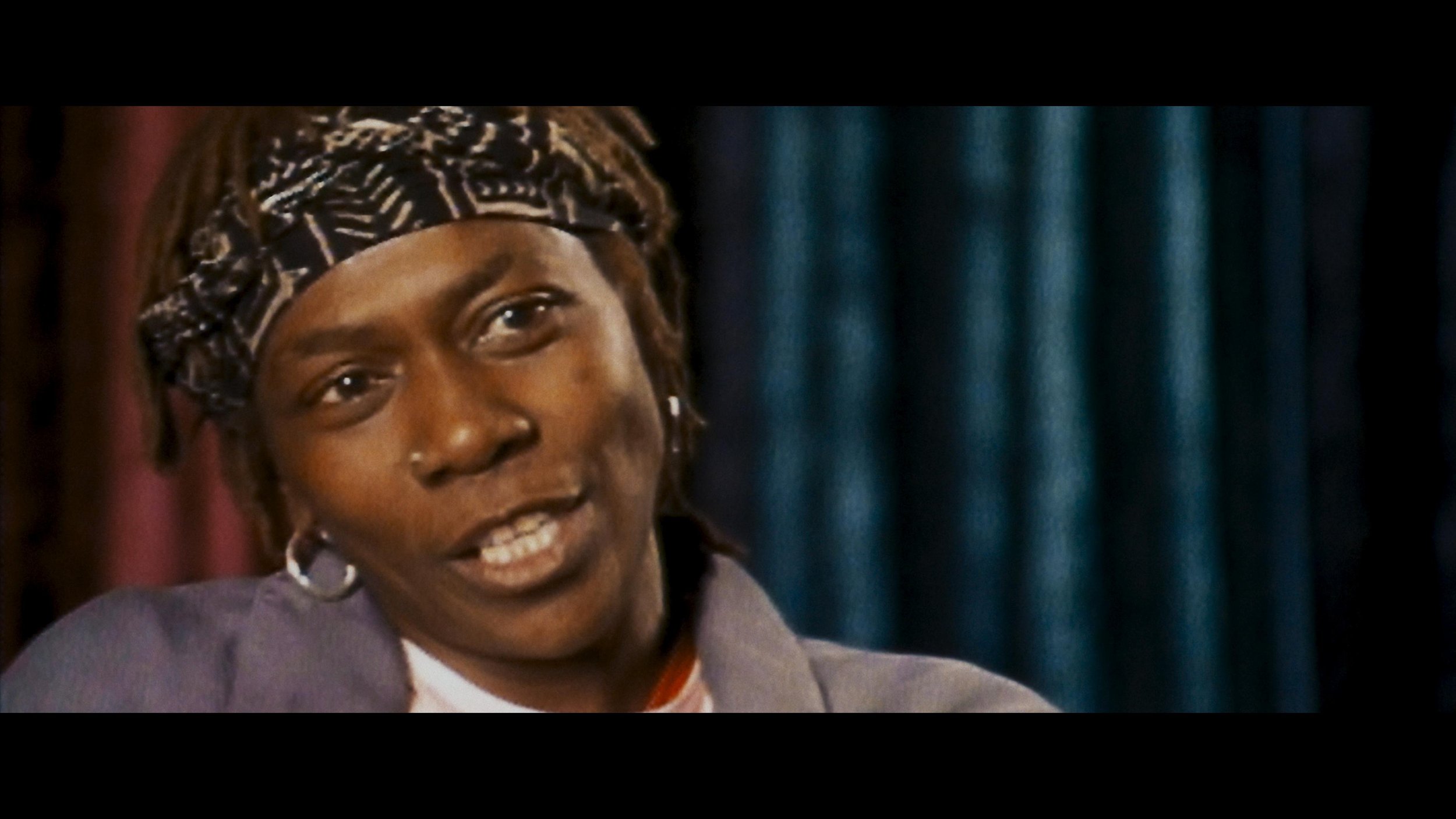

AI up-rez is like salt; a little is good, but too much ruins the dish. Don’t be afraid to let some pictures just look old.

Build a KEM reel of all interview setups. Having the A and B cam shots together in the timeline will help you reference grades quickly.

The first step was look development. Allen had already shot some of the interviews, and we used those to refine the look. I built an LMT that had the cognac golden vibe. I used that look and the ACES standard outputs to create a 709 LUT for Avid media creation. Eric DeAzevedo was the operator responsible for many terabytes of dailies. We also normalized all the archival footage to 23.98 during the dailies step. Cortex was used to make the MXF files and bins. We had to double-hop to render in LiveGrain since it wasn’t supported in Cortex at the time.
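If you want to sanity-check what normalizing to 23.98 does to running time, here is a minimal sketch of the arithmetic. It assumes the simplest case, where every source frame is kept and just played back at the new rate; it is not a description of how Cortex conforms footage, and the function names are made up for illustration.

```python
from fractions import Fraction

# "23.98" is shorthand for the exact rate 24000/1001 (about 23.976 fps).
TARGET_FPS = Fraction(24000, 1001)


def retime_factor(source_fps: Fraction, target_fps: Fraction = TARGET_FPS) -> Fraction:
    """Duration multiplier when each source frame is kept and played at the target rate.

    Hypothetical helper: assumes a frame-for-frame conform with no blending,
    frame dropping, or pulldown removal.
    """
    return source_fps / target_fps


def conformed_duration_seconds(frame_count: int, target_fps: Fraction = TARGET_FPS) -> float:
    """Running time in seconds of frame_count frames played at the target rate."""
    return float(frame_count / target_fps)


if __name__ == "__main__":
    for label, fps in [("24", Fraction(24)),
                       ("25", Fraction(25)),
                       ("29.97", Fraction(30000, 1001))]:
        factor = retime_factor(fps)
        print(f"{label} fps source plays {float(factor):.4f}x as long at 23.98")
```

A 29.97 source comes out at exactly 5/4, which is the same ratio that 3:2 pulldown is built on, while a 25 fps source stretches by a little over 4%. Whether you accept that speed change or interpolate instead is a creative call per clip.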

Early on, we were still in the late stages of the COVID lockdown. I built a reel of every interview setup and had a ClearView session with Hughes and Josh Garcia (Producer). This scene was super critical to our success going forward. It set the bible for the show's look and ensured that Allen’s vision was consistent through the many days of shooting. At the start of each episode, I applied our base settings using a “Fuzzy” match. (Yes, that is a real Baselight thing.) Basically, “Fuzzy” is a setting that lets the machine match grades to shots presumed to be from the same camera roll, rather than matching strictly by timecode. This put all the interviews 90% of the way there from the get-go. The next step was to sort the timeline by clip name and time of day. I would then work through a pass where I would track the shapes and balance out any inconsistencies in lighting as the sun hung lower throughout the day. The archival footage didn’t have as graceful a strategy applied. Each shot was its own battle as the quality differed from source to source. My main goal was to ensure that it was cohesive and told the story Allen was crafting.
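To make the sort-by-clip-name-and-time-of-day idea concrete, here is a rough sketch in Python. This is not Baselight's Fuzzy match, and it is not how Baselight sorts internally; the clip names, the timecodes, and the assumption that the first four characters of a clip name identify the camera roll are all hypothetical.

```python
from collections import defaultdict

# Hypothetical clip records: (clip_name, time_of_day_timecode).
CLIPS = [
    ("A003C012", "15:42:10:05"),
    ("B003C004", "15:41:58:12"),
    ("A001C002", "09:15:00:00"),
    ("A003C001", "13:05:33:20"),
]


def roll_of(clip_name: str) -> str:
    """Assumed naming convention: the first four characters are the camera roll."""
    return clip_name[:4]


def sort_for_grading(clips):
    """Order clips by clip name, then by time-of-day timecode.

    Zero-padded HH:MM:SS:FF strings sort correctly as plain text.
    """
    return sorted(clips, key=lambda clip: (clip[0], clip[1]))


def group_by_roll(clips):
    """Bucket clips per camera roll, each bucket ordered by time of day."""
    groups = defaultdict(list)
    for clip in clips:
        groups[roll_of(clip[0])].append(clip)
    return {roll: sorted(items, key=lambda clip: clip[1])
            for roll, items in sorted(groups.items())}


if __name__ == "__main__":
    for roll, items in group_by_roll(CLIPS).items():
        print(roll, [name for name, _ in items])
```

With shots from one setup sitting next to each other in shooting order, the drift in light across the day shows up as a gradual change you can balance in one pass, instead of something you rediscover shot by shot.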

The first deliverable out of the gate was a theatrical version for the Toronto International Film Festival. I graded in ACEScc going out to PQ 1000 nits. Then that was run through the DoVi analysis, and a P3D65 48-nit version was trimmed. Finally, we applied a P3D65 to XYZ LUT on the output render to create the DCDM.
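For the curious, the math that a P3D65 to XYZ conversion boils down to can be sketched like this. It is an illustration only, not the LUT we used on the show: it assumes linear-light RGB in, derives the 3x3 matrix from the published P3 primaries and D65 white point, and then applies the standard DCDM 2.6 gamma with the 48/52.37 normalization. The function names are my own.

```python
# P3 primaries and D65 white as CIE xy chromaticities.
P3_PRIMARIES = ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060))
D65_WHITE = (0.3127, 0.3290)


def xy_to_xyz(x, y):
    """Chromaticity (x, y) to XYZ, normalized so Y = 1."""
    return (x / y, 1.0, (1.0 - x - y) / y)


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, v):
    """Solve m * s = v for s using Cramer's rule."""
    d = det3(m)
    solution = []
    for j in range(3):
        mj = [[v[i] if k == j else m[i][k] for k in range(3)] for i in range(3)]
        solution.append(det3(mj) / d)
    return solution


def rgb_to_xyz_matrix(primaries, white):
    """Build the linear RGB -> XYZ matrix so that RGB (1, 1, 1) lands on the white point."""
    r, g, b = (xy_to_xyz(*p) for p in primaries)
    rows = [[r[i], g[i], b[i]] for i in range(3)]  # columns are the primaries
    scale = solve3(rows, xy_to_xyz(*white))
    return [[rows[i][j] * scale[j] for j in range(3)] for i in range(3)]


def apply_matrix(m, rgb):
    """Multiply a 3x3 matrix by an RGB triple."""
    return [sum(m[i][j] * rgb[j] for j in range(3)) for i in range(3)]


def dcdm_encode(value):
    """Encode linear XYZ (1.0 = 48-nit peak white) as a 0-1 DCDM code value."""
    return (max(value, 0.0) * 48.0 / 52.37) ** (1.0 / 2.6)


if __name__ == "__main__":
    matrix = rgb_to_xyz_matrix(P3_PRIMARIES, D65_WHITE)
    white_xyz = apply_matrix(matrix, (1.0, 1.0, 1.0))
    print([round(4095 * dcdm_encode(c)) for c in white_xyz])
```

In a real pipeline this sits behind whatever decodes the graded picture back to linear light, and it usually gets baked into a 3D LUT so the render only has to do one lookup per pixel.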

The biggest challenge of this show was keeping up with editorial. As you can imagine, documentary storytelling is honed in the edit bay. The edit was constantly being updated as shots were cleared or discovered. Back at my shop, Leo Ferrini would constantly update my project to chase editorial. Multi-Paste (Remote Grades for our Resolve friends) was clutch in this situation. We took the old grades and copied them across. Leo would categorize the new material so I could sort the scene for the changes. The timelines constantly evolved and took shape until we got Allen in for the final grade. Allen has a great eye and religiously kept us in the world he had envisioned. We paid particular attention to eye-trace and ensured the information from each visual told a straightforward story without distraction. Next was a pass of Dolby trimming to take the approved PQ to 709. We would send that 709 file to Allen and get notes before creating the final IMF for delivery.

A super big thanks to Paul Lavoie for managing this one. There were many moving parts on this production but thanks to him, I rarely felt it. It’s a blessing to have a partner that doesn’t mind getting his hands dirty even though he’s one of the suits😜.

Be sure to check out this killer doc about one of our generation’s most prolific artists, told through Hughes's equally unparalleled artistic voice. Allen is a true master of many formats but has solidified his place as one of the best documentarians. Thanks for taking the time to peek behind the curtain, and let me know what you think.

Here are some more before and afters. Mellow yella’ Dan Muscarella would have been proud.